My master's thesis was a great introduction to the field of telepresence and virtual reality headset control.

'Twas a great project. I'm going to write more about it in the future.

blog post, early development video, git source, document

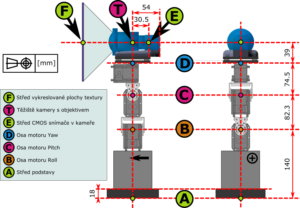

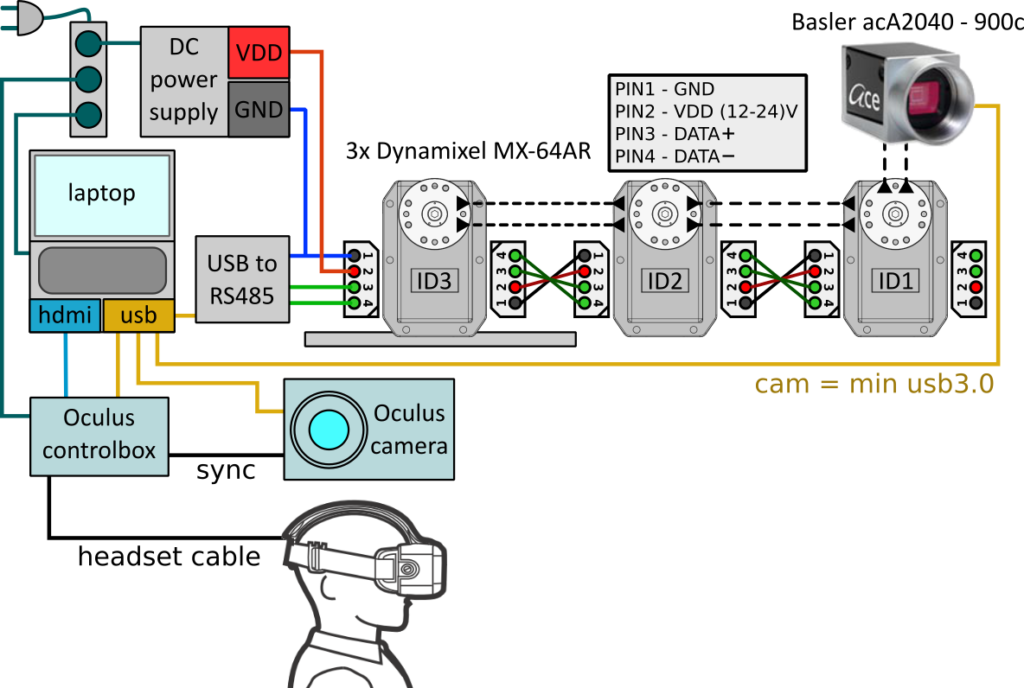

You can get the idea from the first two pictures. A very good camera was mounted on a robotic arm made of 3 servos. The pose of the arm followed the Oculus Rift DK2 (Dev Kit 2) headset, with the arm driven via RS485.
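To give a rough idea of how a headset pose can drive three servos, here is a minimal Python sketch (the thesis code itself was in C#, and this is not that code). It assumes the headset orientation arrives as a unit quaternion, uses the common Z-Y-X yaw/pitch/roll convention, and clamps each angle to made-up servo limits. The function names, the `SERVO_LIMITS` values and the axis convention are all assumptions for illustration. The RS485 side is left out, since it depends on the servo protocol.

```python
import math


def quaternion_to_yaw_pitch_roll(w, x, y, z):
    """Convert a unit quaternion to (yaw, pitch, roll) in degrees.

    Assumes the common Z-Y-X (yaw-pitch-roll) convention; a real
    headset SDK may use different axes and handedness.
    """
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    # Clamp the asin argument so rounding errors cannot leave [-1, 1].
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))


# Hypothetical mechanical limits of the three servos, in degrees.
SERVO_LIMITS = ((-60.0, 60.0), (-45.0, 45.0), (-30.0, 30.0))


def head_to_servo_angles(quaternion, limits=SERVO_LIMITS):
    """Map a head orientation to three servo angles, clamped to the limits."""
    angles = quaternion_to_yaw_pitch_roll(*quaternion)
    return tuple(max(lo, min(hi, a)) for a, (lo, hi) in zip(angles, limits))
```

Clamping matters here because a head can turn much further than a small servo arm, so the arm simply stops at its limit while the user keeps turning.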

The control program (second picture) shows the control GUI and the picture that was projected into the headset. The headset shows what the camera sees, but not as a flat display: the image sits in a 3D scene together with the position of the simulated robotic arm.

'Twas brilliant and semi-fast. The SDK was still at version 0.6, and I was using a C# wrapper because the robotics department required the work to be done in C#.

I wanted to project the camera data into the scene to build a static image history around you, so that after stopping the control loop you could look around the scene and not only in the direction of the camera. = NOT DONE

I was not using camera correction. I set up the picture plane at the place in the virtual 3D scene where I thought it should be, and I placed the observer (user) at the virtual position of the camera's CCD sensor, without correcting the camera projection matrix. Still, the result was plausible, I think.
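For comparison, a simple pinhole model gives a way to size such a picture plane instead of placing it by eye. The Python sketch below is not what the thesis did. It assumes an ideal camera with no lens distortion and a known horizontal field of view, and the function name and example numbers are made up. With the observer at the sensor position, a plane at distance `d` fills the camera's view when its width is `2 * d * tan(fov / 2)`.

```python
import math


def image_plane_size(distance, horizontal_fov_deg, aspect_ratio):
    """Return (width, height) of a picture plane that exactly fills the
    camera's field of view when viewed from the sensor position.

    Assumes an ideal pinhole camera (no lens distortion).
    """
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    width = 2.0 * distance * math.tan(half_fov)
    height = width / aspect_ratio
    return width, height


# Hypothetical example: a 16:9 camera with a 90 degree horizontal FOV,
# with the plane placed 1 unit in front of the observer.
width, height = image_plane_size(1.0, 90.0, 16.0 / 9.0)
```

Moving the plane further away only scales it, so under this model the chosen distance does not change what the observer sees as long as the size follows the formula.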

Next is a set of pictures from the main document.
